Process Large Sequences Efficiently

Learn to use Python generator expressions to process large datasets without holding them in memory. Unlike list comprehensions, which build the entire list in memory, generator expressions produce values on demand, which makes them well suited to large or infinite sequences.
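The difference in memory use can be observed directly. The sketch below is illustrative only: the variable names and the size `n` are arbitrary choices, and `sys.getsizeof` reports only the container's own size, with details that are specific to CPython.

```python
import sys

n = 100_000  # arbitrary size chosen for illustration

# List comprehension: all n squares are computed and stored up front.
squares_list = [x * x for x in range(n)]

# Generator expression: nothing is computed yet; values are produced on demand.
squares_gen = (x * x for x in range(n))

list_size = sys.getsizeof(squares_list)  # grows with n
gen_size = sys.getsizeof(squares_gen)    # small, does not depend on n

print(list_size > gen_size)  # True on CPython
```

Note that a generator can be consumed only once; iterate over it again and it yields nothing.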

Your task is to use generator expressions and the itertools module to build efficient data pipelines. This matters when processing large files or streams, where loading everything into memory is impractical.
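A pipeline of this kind can be sketched by chaining generator expressions and limiting the result with `itertools.islice`. The function name `squares_of_evens` and the choice of filter are assumptions made for illustration, not part of the task statement.

```python
from itertools import count, islice


def squares_of_evens(numbers):
    """Lazily yield the square of each even number in `numbers`."""
    evens = (n for n in numbers if n % 2 == 0)  # stage 1: filter
    return (n * n for n in evens)               # stage 2: transform


# count(1) is the infinite sequence 1, 2, 3, ...; islice stops after 5 results,
# so only as many inputs as needed are ever generated.
first_five = list(islice(squares_of_evens(count(1)), 5))
print(first_five)  # [4, 16, 36, 64, 100]
```

Because every stage is lazy, the same function works unchanged on a list, a range, or an unbounded source.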

Example 1:

Input: numbers = range(1, 6)

Output: 55

Explanation: Sum of squares (1 + 4 + 9 + 16 + 25 = 55), computed with a generator expression.
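One possible way to write Example 1 is to pass a generator expression straight to `sum()`. The function name `sum_of_squares` is a hypothetical choice for this sketch.

```python
def sum_of_squares(numbers):
    # sum() pulls one value at a time from the generator expression,
    # so no intermediate list of squares is built.
    return sum(x * x for x in numbers)


print(sum_of_squares(range(1, 6)))  # 55
```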

Example 2:

Input: numbers = [1, 2, 3, 4, 5]

Output: [1, 3, 5]

Explanation: Get the first 3 odd numbers using a generator.
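Example 2 could be approached by filtering lazily and then taking a fixed number of results with `itertools.islice`. The name `first_odds` and its parameter `k` are assumptions introduced for this sketch.

```python
from itertools import islice


def first_odds(numbers, k):
    # Lazily filter the odd values; nothing is evaluated until islice asks for it.
    odds = (n for n in numbers if n % 2 == 1)
    # islice stops after k matches, so the rest of the input is never examined.
    return list(islice(odds, k))


print(first_odds([1, 2, 3, 4, 5], 3))  # [1, 3, 5]
```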

Example 3:

Input: start=1, end=10

Output: 5

Explanation: Count the even numbers from 1 to 10 inclusive (2, 4, 6, 8, 10) without creating a list.
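A counting approach for Example 3 might look like the following. It assumes `end` is inclusive, which the problem statement does not spell out; the function name `count_evens` is likewise illustrative.

```python
def count_evens(start, end):
    # Assumption: `end` is inclusive, hence range(start, end + 1).
    # sum() adds 1 for each even number; no list is ever created.
    return sum(1 for n in range(start, end + 1) if n % 2 == 0)


# With start=1 and end=10 this counts 2, 4, 6, 8 and 10.
result = count_evens(1, 10)
print(result)  # 5
```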

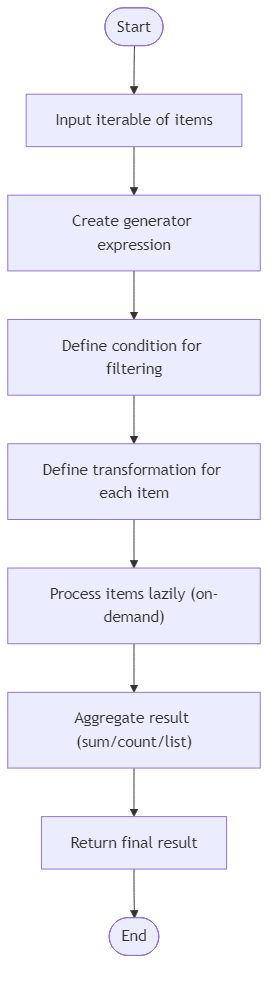

Algorithm Flow

Best Answers

def process_large_data(items):
    # Lazily yield the square of each item; no list is built in memory.
    for x in items:
        yield x * x
